Justice: What Is the Right Thing to Do? - Article Plan (Based on 12/03/2025 Information)

As of March 12, 2025, AI is reshaping justice systems worldwide and demands ethical consideration and responsible implementation, a position championed by Uthra Sridhar and by UNESCO initiatives.

The concept of justice is undergoing a rapid transformation, propelled by the pervasive integration of artificial intelligence (AI) into societal structures. No longer confined to traditional legal frameworks, justice now intersects with complex algorithms that influence decisions across finance, employment, and, crucially, the judicial system. This evolution, noted on March 12, 2025, necessitates a re-evaluation of fundamental principles.

AI’s presence isn’t limited to simple automation; it extends to predictive policing, legal research, and even courtroom procedures. UNESCO, recognizing this shift – highlighted at the February 19th, 2025 event in Kigali – is actively equipping judicial actors with the tools to navigate this new terrain. Advocates like Uthra Sridhar emphasize responsible AI implementation, acknowledging both its potential and inherent risks. The landscape demands a proactive, ethical approach to ensure fairness and equity.

The Core Question: Defining “The Right Thing”

Defining “the right thing” in the context of justice becomes increasingly complex with the advent of AI. Traditionally rooted in philosophical and ethical considerations, justice now faces algorithmic interpretations. As of March 12, 2025, the question isn’t simply about legal correctness, but about fairness, bias, and accountability within AI systems.

The integration of AI, as observed through UNESCO’s initiatives and Uthra Sridhar’s advocacy, forces us to confront whether algorithmic outcomes truly represent just results. Can an algorithm, however sophisticated, embody the nuanced understanding of human circumstances essential for equitable judgment? The core challenge lies in ensuring AI serves justice, rather than dictating it, demanding careful scrutiny and ethical guidelines.

Historical Perspectives on Justice

Historically, conceptions of justice have evolved dramatically. Ancient philosophers like Plato and Aristotle laid foundational principles, emphasizing virtue and societal harmony. Later, Enlightenment thinkers, including Kant, focused on universal moral laws and individual rights. These perspectives shaped modern legal systems, yet today’s AI integration presents a novel challenge.

Considering these historical roots is crucial as we navigate AI’s impact. The question of “the right thing” isn’t new, but the tools for determining it are. As of March 12, 2025, the debate echoes past philosophical inquiries, now amplified by the potential for algorithmic bias and the need for responsible AI, as highlighted by advocates like Uthra Sridhar and UNESCO’s work.

3.1 Ancient Philosophies: Plato & Aristotle

Plato, in The Republic, envisioned justice as harmony within the soul and state, achieved through reason and a hierarchical social structure. Aristotle, his student, focused on distributive and corrective justice, emphasizing fairness in allocation and rectification of wrongs. Both believed justice was intrinsically linked to virtue and the pursuit of the “good life.”

These ancient views provide a crucial backdrop to today’s discussions on AI and justice. While AI can analyze data for fairness, it lacks the inherent moral compass these philosophers emphasized. As of March 12, 2025, understanding their frameworks is vital when considering algorithmic bias and ensuring AI serves, rather than undermines, true justice.

3.2 Enlightenment Era & Kantian Ethics

The Enlightenment shifted focus to individual rights and reason, profoundly influencing justice concepts. Immanuel Kant’s categorical imperative – acting only according to principles universalizable without contradiction – provided a deontological framework. Justice, for Kant, wasn’t about outcomes but about duty and respecting the inherent dignity of all rational beings.

This contrasts with purely consequentialist views. Applied to AI, a Kantian approach points toward transparency and explainability: if people are to be treated as ends rather than merely as means, they must be able to understand the reasons behind decisions that affect them. As of March 12, 2025, algorithms must be justifiable based on universal principles, not simply optimized for efficiency, to align with Enlightenment ideals of fairness and respect.

Modern Justice Systems: A Global Overview

Contemporary justice systems vary in structure but fall broadly into two traditions: Common Law (precedent-based and evolving through judicial decisions, as in the US and UK) and Civil Law (built on codified statutes with a legislative focus, prevalent in continental Europe). Both grapple with similar challenges: ensuring fairness, efficiency, and access.

International Law, including treaties and conventions, adds another layer, addressing transnational crimes and human rights. As of March 12, 2025, AI’s integration impacts both traditions, raising questions about algorithmic bias and due process. UNESCO’s work, highlighted in Kigali, underscores the need for global cooperation in navigating this transformation.

4.1 Common Law vs. Civil Law Traditions

Common Law systems, originating in England, rely heavily on judicial precedent – “stare decisis” – building legal principles through case-by-case rulings. This fosters adaptability but can lead to complexity. Conversely, Civil Law systems, rooted in Roman law, prioritize comprehensive legal codes enacted by legislatures. They emphasize systematic organization and predictability.

The integration of Artificial Intelligence presents unique challenges to each. Common Law’s reliance on nuanced interpretation requires AI capable of contextual understanding, while Civil Law’s codified nature demands AI adept at precise application of rules. Both systems, as of March 12, 2025, are exploring AI’s potential.

4.2 The Role of International Law

International Law, encompassing treaties, customary practices, and general principles, increasingly intersects with domestic justice systems. As of March 12, 2025, its role is amplified by global challenges like cross-border crime and human rights violations. AI’s application in this sphere is nascent but promising, particularly in analyzing vast datasets to identify patterns of international offenses.

UNESCO’s work, highlighted in the February 2025 Kigali meeting, emphasizes equipping judicial actors to navigate AI’s impact on international legal frameworks. Challenges include ensuring AI algorithms respect diverse legal traditions and upholding principles of sovereignty while facilitating international cooperation in justice administration.

The Rise of Artificial Intelligence in Justice

Artificial Intelligence (AI) is no longer a futuristic concept but a present reality, deeply influencing core societal systems, including justice. As of March 12, 2025, its integration extends beyond simple automation to complex decision-support tools. This shift necessitates a critical examination of ethical implications and potential biases.

The emergence of AI in justice isn’t limited to tools like ChatGPT for document summarization; it encompasses applications impacting fundamental legal processes. Uthra Sridhar’s advocacy highlights the urgent need for responsible AI deployment, while UNESCO’s initiatives, demonstrated in Kigali (February 2025), focus on equipping judicial actors for this transformation.

AI Applications in the Judiciary

AI’s presence within the judiciary is rapidly expanding, manifesting in diverse applications. Predictive policing, while promising, raises concerns about algorithmic bias and fairness – a critical area demanding scrutiny. Simultaneously, AI-powered legal research and document review tools are streamlining workflows, enhancing efficiency for legal professionals.

These advancements, noted as of March 12, 2025, aren’t merely about speed; they represent a fundamental shift in how legal processes are conducted. Uthra Sridhar’s work emphasizes the importance of responsible implementation, while UNESCO’s February 2025 Kigali event focused on equipping judges with the necessary skills to navigate this evolving landscape.

6.1 Predictive Policing & Algorithmic Bias

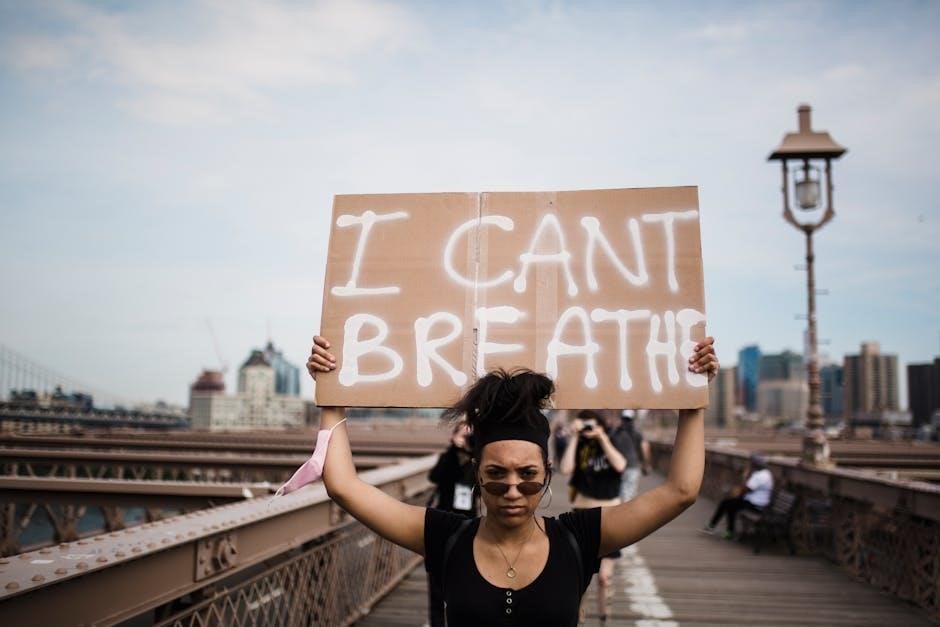

Predictive policing, leveraging AI to forecast crime hotspots, presents a double-edged sword. While potentially enhancing resource allocation, it’s deeply susceptible to algorithmic bias, perpetuating existing societal inequalities. Data reflecting historical biases in policing can lead to disproportionate targeting of marginalized communities, reinforcing systemic injustice.

As of March 12, 2025, this remains a significant ethical concern. Uthra Sridhar’s advocacy highlights the necessity for transparency and rigorous testing of these algorithms. UNESCO’s discussions in Kigali (February 2025) underscored the need for judicial actors to understand and mitigate these biases, ensuring fairness and equity in AI-driven law enforcement.
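The bias mechanism described above can be illustrated with a toy simulation. This is a minimal sketch under stated assumptions, not a model of any real predictive policing product: the function name, the two-district setup, and all numbers are hypothetical. It assumes that patrols are allocated in proportion to recorded incidents and that both districts have the same underlying incident rate.

```python
def simulate_patrol_feedback(recorded, true_rates, patrols_per_round=100, rounds=5):
    """Track each district's share of recorded incidents over time.

    recorded:   initial recorded incidents per district (historical data)
    true_rates: incidents observed per patrol unit in each district
    Patrols are allocated in proportion to cumulative recorded incidents,
    so a district is only "seen" as much as it is patrolled.
    """
    recorded = list(recorded)
    history = []
    for _ in range(rounds):
        total = sum(recorded)
        shares = [r / total for r in recorded]
        history.append(shares)
        for i, share in enumerate(shares):
            patrols = patrols_per_round * share
            # New records depend on patrol presence, not only on the true rate.
            recorded[i] += patrols * true_rates[i]
    return history


# Hypothetical starting point: identical true rates, but skewed historical records.
history = simulate_patrol_feedback(recorded=[60, 40], true_rates=[1.0, 1.0])
for round_number, shares in enumerate(history, start=1):
    print(round_number, [round(s, 3) for s in shares])
```

In this simplified model the first district keeps its 60 percent share of recorded incidents in every round even though the true rates are identical, because the data measures where patrols were sent rather than where incidents occurred. The historical skew is never corrected by new data, which is one way the perpetuation of inequality described above can arise.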

6.2 AI-Powered Legal Research & Document Review

AI is revolutionizing legal research and document review, dramatically increasing efficiency for legal professionals. These tools can swiftly analyze vast datasets, identifying relevant precedents and key information within complex legal documents – tasks previously consuming significant time and resources. This capability extends beyond simple searches, offering nuanced analysis and pattern recognition.

However, reliance on AI necessitates careful consideration. As of March 12, 2025, ensuring accuracy and avoiding algorithmic limitations are crucial. While AI excels at processing data, human oversight remains vital for contextual understanding. The advancements discussed at the UNESCO event in Kigali (February 2025) emphasize responsible integration of these technologies.

UNESCO’s Role in AI & Judicial Transformation (Kigali, Feb 2025)

At the 3rd Annual EACJ Judicial Conference in Kigali, February 19, 2025, UNESCO positioned itself as a central facilitator in navigating the integration of Artificial Intelligence within judicial systems. Recognizing AI’s transformative potential – and inherent risks – UNESCO focused on equipping judicial actors with the necessary tools and knowledge.

The organization’s efforts centered on fostering responsible AI implementation, emphasizing ethical considerations and capacity building. Discussions highlighted the need for judicial professionals to understand AI’s capabilities and limitations, ensuring fairness and transparency. This initiative aligns with broader global conversations, including advocacy from figures like Uthra Sridhar, promoting responsible AI in justice.

Ethical Considerations of AI in Justice

The integration of Artificial Intelligence into justice systems raises critical ethical dilemmas. Paramount among these is ensuring transparency and explainability of algorithms; “black box” AI decisions undermine due process and public trust. Accountability also emerges as a key concern – who is responsible when an AI system makes an erroneous or biased judgment?

Furthermore, the potential for algorithmic bias demands careful scrutiny. AI systems trained on biased data can perpetuate and amplify existing societal inequalities. Addressing these challenges requires robust oversight, ongoing evaluation, and a commitment to fairness, echoing the advocacy of experts like Uthra Sridhar.

8.1 Transparency & Explainability of Algorithms

A core ethical imperative in AI-driven justice is algorithmic transparency. “Black box” AI, where decision-making processes are opaque, erodes trust and hinders effective legal challenge. Explainability – the ability to understand why an AI reached a specific conclusion – is crucial for ensuring fairness and accountability.

Without transparency, identifying and mitigating biases becomes exceedingly difficult. Legal professionals and the public must be able to scrutinize the logic behind AI judgments. This necessitates developing techniques for interpreting complex algorithms and presenting their reasoning in an accessible manner, aligning with responsible AI principles advocated by figures like Uthra Sridhar.
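One simple form of explainability is a score whose components can be read off directly. The sketch below is illustrative only: the feature names, weights, and the `explain_score` helper are hypothetical and do not describe any deployed judicial tool. It assumes a plain weighted-sum model, where each feature's contribution is its weight multiplied by its value.

```python
def explain_score(weights, features, baseline=0.0):
    """Return a score and the per-feature contributions behind it.

    Each contribution is weight * value, so the score can be fully
    reconstructed (and challenged) from the listed parts.
    """
    contributions = {
        name: weights[name] * features.get(name, 0.0) for name in weights
    }
    score = baseline + sum(contributions.values())
    # Largest absolute contribution first, so reviewers see the main drivers.
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return score, ranked


# Hypothetical weights and case data, for illustration only.
weights = {
    "prior_missed_hearings": 0.5,
    "months_since_last_case": -0.25,
    "pending_cases": 0.25,
}
case = {"prior_missed_hearings": 2, "months_since_last_case": 2, "pending_cases": 1}

score, ranked = explain_score(weights, case)
print(f"score = {score}")
for name, contribution in ranked:
    print(f"  {name}: {contribution:+.2f}")
```

A model this simple trades predictive power for legibility. More complex models need dedicated interpretation techniques, but the goal is the same: a party affected by the output should be able to see which inputs drove it.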

8.2 Accountability & Responsibility for AI Decisions

Establishing clear lines of accountability is paramount when AI systems contribute to legal outcomes. If an AI algorithm makes an erroneous or biased decision, determining who is responsible – the developer, the deploying institution, or the AI itself – presents a significant challenge. Current legal frameworks often struggle to address this novel situation.

Responsibility cannot simply be delegated to the AI. Human oversight and the ability to override AI decisions are essential safeguards. UNESCO’s work, highlighted in the Kigali meeting (February 2025), emphasizes equipping judicial actors to navigate these complexities, ensuring that AI serves justice rather than undermining it, as championed by advocates like Uthra Sridhar.

AI and Access to Justice

Artificial Intelligence holds immense potential to democratize access to justice, traditionally hampered by cost and logistical barriers. AI-powered tools can significantly reduce expenses associated with legal research, document review, and preliminary case assessment, making legal assistance more affordable for a wider population.

Furthermore, AI can bridge the justice gap for marginalized communities by providing automated translation services, simplifying complex legal language, and offering preliminary legal guidance. As noted on March 12, 2025, these advancements align with UNESCO’s focus on equipping judicial actors to leverage AI for equitable outcomes, a goal that Uthra Sridhar also advocates.

9.1 Reducing Costs & Improving Efficiency

AI’s capacity to automate repetitive and time-consuming legal tasks directly translates to reduced costs within justice systems. Processes like e-discovery, document summarization, and initial case filing can be streamlined, freeing up legal professionals to focus on more complex aspects of cases. This efficiency gain, highlighted as of March 12, 2025, isn’t merely about saving money; it’s about optimizing resource allocation.

By handling routine tasks, AI allows courts and legal aid organizations to serve a larger number of individuals, improving overall system efficiency and responsiveness. This aligns with Uthra Sridhar’s advocacy and UNESCO’s initiatives to modernize judicial processes.

9.2 Bridging the Justice Gap for Marginalized Communities

A significant barrier to justice is accessibility, particularly for marginalized communities facing financial constraints or geographical limitations. As of March 12, 2025, AI-powered tools offer potential solutions by providing affordable or even free legal information and assistance. AI chatbots can offer preliminary legal guidance, and automated document generation can simplify complex processes.

These technologies, championed by advocates like Uthra Sridhar and supported by UNESCO’s work, can help level the playing field, ensuring that vulnerable populations have access to the legal system. This is crucial for upholding principles of fairness and equity within justice frameworks.

Challenges to Implementing AI in Legal Systems

Despite the promise of AI in justice, significant hurdles exist. Data privacy and security are paramount concerns, as legal data is highly sensitive. Ensuring robust safeguards against breaches and misuse is critical, as highlighted on March 12, 2025. Furthermore, resistance to change from legal professionals, accustomed to traditional methods, poses a challenge.

Overcoming this requires comprehensive training and demonstrating the benefits of AI tools. Concerns about algorithmic bias and the need for transparency, as emphasized by Uthra Sridhar and UNESCO, also demand careful attention during implementation to maintain public trust.

10.1 Data Privacy & Security Concerns

Legal datasets contain profoundly sensitive personal information, making data privacy and security paramount when integrating AI. Breaches could severely compromise individual rights and undermine the justice system’s integrity. Robust encryption, access controls, and anonymization techniques are essential, as noted on March 12, 2025.
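As one concrete illustration of the safeguards listed above, the sketch below replaces direct identifiers with keyed hashes before a record is passed to an analysis tool. The helper names, field names, and key are assumptions made for this example. Note that this is pseudonymization, which is weaker than full anonymization: anyone holding the key can re-link records, and the remaining fields may still identify a person.

```python
import hashlib
import hmac


def pseudonymize(value: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed HMAC-SHA256 digest (truncated)."""
    digest = hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]


def redact_record(record: dict, sensitive_fields: set, secret_key: bytes) -> dict:
    """Return a copy of the record with sensitive fields pseudonymized."""
    return {
        field: pseudonymize(str(value), secret_key) if field in sensitive_fields else value
        for field, value in record.items()
    }


# Hypothetical key and record. A real key would come from a secure key store,
# never from source code.
DEMO_KEY = b"demo-key-for-illustration-only"
record = {"name": "Jane Doe", "national_id": "X1234567", "case_type": "civil"}

print(redact_record(record, {"name", "national_id"}, DEMO_KEY))
```

Because the hash is keyed and deterministic, the same person maps to the same token across records, which preserves the ability to link cases without exposing the name itself.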

Furthermore, the potential for misuse of data – even without a breach – raises ethical concerns. Clear regulations and oversight mechanisms are needed to prevent discriminatory practices or unauthorized surveillance. Addressing these concerns is vital for building public trust in AI-driven justice solutions, aligning with Uthra Sridhar’s advocacy.

10.2 Resistance to Change from Legal Professionals

Implementing AI in legal systems often faces resistance from professionals accustomed to traditional methods. Concerns about job displacement, the perceived “black box” nature of algorithms, and a general skepticism towards technology are common hurdles, as of March 12, 2025.

Effective change management strategies are crucial, including comprehensive training programs and demonstrating AI’s potential to augment rather than replace human expertise. Addressing fears and fostering collaboration between legal professionals and AI developers is vital. UNESCO’s initiatives, highlighted in Kigali on February 19, 2025, emphasize equipping judicial actors for this transformation.

Uthra Sridhar’s Advocacy for Responsible AI in Justice

Uthra Sridhar is a leading voice championing the ethical deployment of Artificial Intelligence within justice systems, as recognized on March 12, 2025. Her work emphasizes the critical need for transparency, accountability, and fairness in algorithmic decision-making.

Sridhar advocates for proactive measures to mitigate bias in AI, ensuring equitable access to justice for all communities. She stresses that AI should serve to enhance, not undermine, fundamental legal principles. Her advocacy aligns with UNESCO’s efforts, showcased at the February 19th Kigali event, to equip judicial actors with the tools for responsible AI integration.

The Impact of AI on Employment in the Legal Field

As of March 12, 2025, the integration of Artificial Intelligence is reshaping the legal employment landscape. While concerns about job displacement exist, the reality is more nuanced. AI is automating tasks like legal research and document review – functions previously handled by junior associates and paralegals.

However, this automation also creates opportunities. Legal professionals will increasingly focus on higher-level strategic thinking, client interaction, and ethical oversight of AI systems. The demand for skills in AI ethics, data analysis, and algorithmic auditing is rising, necessitating upskilling and adaptation within the legal workforce.

AI and the Future of Legal Education

As of March 12, 2025, legal education is undergoing a significant transformation driven by AI. Traditional curricula are evolving to incorporate courses on AI ethics, algorithmic bias, and the legal implications of AI technologies. Law schools are recognizing the need to equip future lawyers with the skills to navigate this changing landscape.

Furthermore, AI-powered tools are being integrated into legal education for tasks like legal research, case analysis, and contract drafting. This prepares students for the practical application of AI in their future careers. The focus is shifting towards fostering critical thinking, problem-solving, and responsible AI implementation.

Bias in AI Algorithms: Sources and Mitigation Strategies

As of March 12, 2025, a critical concern surrounding AI in justice is algorithmic bias. These biases stem from skewed training data reflecting existing societal inequalities, leading to discriminatory outcomes. Sources include historical data, flawed assumptions in algorithm design, and underrepresentation of certain groups.

Mitigation strategies involve careful data curation, bias detection tools, and algorithmic auditing. Promoting transparency and explainability is crucial, allowing for scrutiny of AI decision-making processes. Uthra Sridhar’s advocacy emphasizes responsible AI, demanding ongoing monitoring and refinement to ensure fairness and equity within justice systems.
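A basic bias detection check of the kind mentioned above compares how often each group receives a favourable outcome. The sketch below computes per-group selection rates and their ratio, often called the disparate impact ratio. The function names and sample data are hypothetical, and a single ratio is only a first screening step, not a complete algorithmic audit.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of favourable outcomes per group.

    `records` is an iterable of (group, favourable) pairs, where
    `favourable` is True when the system's output benefited the person.
    """
    totals = defaultdict(int)
    favourable = defaultdict(int)
    for group, outcome in records:
        totals[group] += 1
        if outcome:
            favourable[group] += 1
    return {group: favourable[group] / totals[group] for group in totals}


def disparate_impact_ratio(records):
    """Lowest group selection rate divided by the highest."""
    rates = selection_rates(records)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no group received a favourable outcome
    return min(rates.values()) / highest


# Hypothetical audit sample: (group label, favourable outcome?)
sample = (
    [("A", True)] * 8 + [("A", False)] * 2
    + [("B", True)] * 5 + [("B", False)] * 5
)

print(f"selection rates: {selection_rates(sample)}")
print(f"disparate impact ratio: {disparate_impact_ratio(sample):.3f}")
```

Here group A has a selection rate of 0.8 and group B a rate of 0.5, giving a ratio of 0.625. A commonly cited rule of thumb from US employment-selection guidance treats ratios below 0.8 as a signal for closer review; whether that threshold suits a given justice application is a policy question the code cannot answer.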

The EACJ (East African Court of Justice) and AI Integration

As of March 12, 2025, the East African Court of Justice (EACJ) is actively exploring AI integration, as highlighted during the 3rd Annual EACJ Judicial Conference in Kigali on February 19, 2025. UNESCO is playing a pivotal role, equipping judicial actors with the necessary tools to navigate this technological shift.

The focus is on leveraging AI to enhance efficiency, improve access to justice, and address regional legal challenges. This includes exploring AI-powered legal research and document review. However, the EACJ recognizes the ethical considerations and potential biases, aligning with Uthra Sridhar’s call for responsible AI implementation within judicial frameworks.

AI for Summarizing Legal Documents & Presentations

As of March 12, 2025, Artificial Intelligence is demonstrating significant capabilities in streamlining legal workflows, particularly in summarizing complex documents and crafting compelling presentations. This isn’t limited to consumer-facing tools like ChatGPT; it extends to specialized applications within the judiciary.

AI’s ability to quickly distill key information from lengthy legal texts offers substantial time savings for judges, lawyers, and researchers. Furthermore, AI-driven presentation design tools assist in clearly communicating legal arguments. UNESCO’s work with the EACJ acknowledges this potential, emphasizing the need for judicial actors to be proficient in utilizing these emerging technologies responsibly and ethically.

AI in Financial Justice & Fraud Detection

Current as of March 12, 2025, Artificial Intelligence is rapidly becoming a crucial tool in bolstering financial justice and combating fraud. AI algorithms excel at identifying patterns and anomalies within vast financial datasets, flagging potentially illicit transactions with remarkable speed and accuracy. This capability extends beyond simple fraud detection, aiding in investigations related to money laundering and financial crimes.
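To make the idea of flagging anomalies concrete, the sketch below marks transactions whose amounts sit far from the typical value, using a modified z-score based on the median and the median absolute deviation (MAD). This is a deliberately simple statistical stand-in, not a description of how any regulator's system works. The function name, the sample amounts, and the 3.5 cut-off (a conventional choice for this statistic) are assumptions for illustration.

```python
from statistics import median


def flag_anomalies(amounts, threshold=3.5):
    """Return indexes of amounts whose modified z-score exceeds the threshold.

    The median and MAD are used instead of the mean and standard deviation
    so that a single extreme value does not hide itself by inflating the spread.
    """
    med = median(amounts)
    mad = median(abs(a - med) for a in amounts)
    if mad == 0:
        return []  # no spread to measure against
    flagged = []
    for index, amount in enumerate(amounts):
        modified_z = 0.6745 * (amount - med) / mad
        if abs(modified_z) > threshold:
            flagged.append(index)
    return flagged


# Hypothetical transaction amounts; index 4 is far outside the usual range.
amounts = [120, 135, 110, 128, 9800, 131, 125, 118]
print(flag_anomalies(amounts))  # prints [4]
```

A flag of this kind is a prompt for human review, not a finding of fraud: unusual transactions are often legitimate, and the transparency and accountability concerns raised elsewhere in this article apply here as well.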

The integration of AI promises to enhance the efficiency and effectiveness of financial regulatory bodies, ensuring fairer outcomes and protecting vulnerable populations. Uthra Sridhar’s advocacy highlights the importance of responsible AI deployment, emphasizing transparency and accountability in these critical applications within the justice system.

AI and the Transformation of Courtroom Procedures

As of March 12, 2025, Artificial Intelligence is poised to fundamentally reshape courtroom procedures, moving beyond traditional methods. AI-powered tools are streamlining processes like evidence review, legal research, and document summarization, significantly reducing the time and resources required for case preparation. This allows legal professionals to focus on more complex strategic aspects of litigation.

Furthermore, AI is facilitating real-time transcription and translation services, enhancing accessibility and inclusivity within the courtroom. UNESCO’s work, particularly at the February 2025 Kigali event, underscores the need for judicial actors to adapt to these changes. Uthra Sridhar champions responsible AI integration, ensuring fairness and upholding judicial discretion.

The Legal Framework for AI Regulation in Justice

As of March 12, 2025, a robust legal framework governing AI’s application in justice remains a work in progress globally. Existing laws often struggle to address the unique challenges posed by algorithmic decision-making, particularly concerning transparency, accountability, and bias. The need for specific regulations is highlighted by Uthra Sridhar’s advocacy for responsible AI.

UNESCO’s initiatives, exemplified by the February 2025 Kigali discussions, emphasize international cooperation in establishing ethical guidelines. Key areas of focus include data privacy, security, and ensuring human oversight of AI systems. Developing clear legal standards is crucial to mitigate potential risks and foster public trust in AI-driven justice processes.

Case Studies: Successful AI Implementations in Justice Systems

As of March 12, 2025, several jurisdictions demonstrate promising AI applications within their justice systems. Although widespread, fully fledged implementations are still emerging, initial successes showcase AI’s potential. These include AI-powered tools for legal research, which significantly reduce the time spent on document review and case preparation.

Furthermore, pilot programs utilizing AI for fraud detection in financial justice are yielding positive results. The East African Court of Justice (EACJ) is actively exploring AI integration, as highlighted during the UNESCO event in Kigali, February 2025. These cases underscore the importance of responsible AI deployment, aligning with Uthra Sridhar’s principles.

Potential Risks of Over-Reliance on AI in Justice

As of March 12, 2025, a critical concern is the potential for diminished judicial discretion if AI systems become overly dominant in decision-making. Algorithmic bias, despite mitigation efforts, remains a significant risk, potentially perpetuating existing societal inequalities. Over-dependence could also lead to a deskilling of legal professionals, hindering their ability to critically assess AI outputs.

Furthermore, vulnerabilities in data privacy and security pose threats, as compromised data could be used to manipulate AI outcomes. Maintaining the human element, including empathy and nuanced understanding, is vital. Uthra Sridhar’s advocacy emphasizes responsible AI, acknowledging these risks and advocating for careful implementation alongside human oversight.

The Human Element: Maintaining Judicial Discretion

As of March 12, 2025, preserving judicial discretion is paramount amidst AI integration. While AI offers efficiency, it lacks the nuanced understanding and empathetic consideration crucial for just outcomes. Human judges must retain the authority to override algorithmic suggestions, particularly in complex cases demanding contextual awareness.

Uthra Sridhar’s work highlights the necessity of a balanced approach – leveraging AI’s capabilities while safeguarding the core principles of fairness and equity. The human element ensures accountability and prevents the perpetuation of biases embedded within algorithms. Maintaining this balance is vital for upholding the integrity of justice systems globally, as UNESCO emphasizes.

As of March 12, 2025, the future of justice hinges on responsibly integrating AI. UNESCO’s initiatives, particularly those showcased at the February 2025 Kigali event, demonstrate a proactive approach to equipping judicial actors. Uthra Sridhar’s advocacy underscores the need for transparency, accountability, and bias mitigation in algorithmic systems.

Successfully navigating this transformation requires a commitment to continuous evaluation and adaptation. AI should augment, not replace, human judgment, ensuring fairness and access to justice for all. The challenge lies in harnessing AI’s power while upholding the fundamental principles of equity and preserving the essential human element within legal processes.